The AI-volution: Redefining Data Security and Privacy

Posted by HANDD Admin on 7th October 2024

Artificial Intelligence (AI) has dominated the headlines recently, as governments and businesses the world over grapple with how to adopt and benefit from this evolving technology. It has been predicted that AI adoption, and the efficiencies it delivers, could double or even triple world GDP by 2030.

In relation to information security, AI brings a multitude of benefits, such as advanced threat detection, audits to monitor compliance, and proactive security measures that mimic some of the tasks a human analyst would perform. Machine learning in cyber security toolsets is not actually new. By leveraging AI technology effectively, businesses can strengthen their defences and protect sensitive data from evolving cyber threats.

While there are clear benefits to adopting AI models into your technology stack, doing so is not without risk, as with any emerging technology. AI is transforming the journey of our data and, with it, the methods we need to adopt to keep it safe. The key is not to be blindsided by the benefits, but to fully understand the threats and build a robust armoury to tackle them and manage the risks.

WHAT IS ARTIFICIAL INTELLIGENCE?

AI is the term applied to the suite of technologies that attempt to simulate human-level intelligence and problem-solving, performing tasks and providing outputs that previously would only have been possible with human involvement.

The term ‘AI’ is used to describe technologies including Machine Learning, Deep Learning, Large Language Models and Generative AI. Each of these technology types delivers its own benefits. The recent boom in Generative AI, which uses data to generate new content such as code, text, images, video or audio, likely poses the biggest challenge to our data security.

WHY IS ARTIFICIAL INTELLIGENCE A THREAT TO DATA?

AI platforms work based on the data provided to them. They ‘self-learn’ by identifying patterns in data and use these patterns to produce outputs. This learning process depends entirely on the data the model is given: to learn, the data must be stored and, in effect, remembered by the model. If sensitive data is sent to the platform, your organisation loses control of that data and will no longer be able to see, let alone police, how it is being used.

If your data has been exposed to an AI model, there is a very real threat that the model could reveal that information in response to another query. This means a user who was never expected to access that information could ask a question and be presented with sensitive data, and that user could even be outside your organisation altogether.

There is also the risk that AI models are subject to zero-day vulnerabilities that have yet to be discovered, as well as to known vulnerabilities already published as CVEs. Bad actors could exploit either to extract data from within the models that was never sanctioned for the public domain.

As AI technology continues to evolve and is integrated into more tools, so too do the potential threats AI poses to data security. Businesses and those responsible for data security need to continuously adapt their defensive strategies to mitigate these risks effectively.

WHAT IS HAPPENING NOW, AND HOW DOES IT AFFECT YOUR DATA?

As AI grows and finds its way into business and personal applications we use daily, understanding what data is going in and how it’s being used by the algorithm is imperative. More importantly, it needs to be controlled. You need to be aware of where and how your data might become available to others, both inside and outside your company.

Although most people immediately think of online generative AI services, such as those offered by OpenAI, when the term AI is used, standalone AI web tools are no longer the only threat to your data. Microsoft’s Copilot is now embedded into both the Windows operating system and the widely used Microsoft 365 business productivity platform.

There are plenty of other applications and integrations to be cognisant of. A simple search engine query for the term “AI productivity tools” returns 408,000,000 results, which represents a considerable surface area to police.

As we know, it is impossible to block everything, and there are clear advantages and legitimate use cases for adopting these tools within your business. Sanctioned tools, or those developed internally, can increase productivity and deliver welcome advances in business operations.

WHAT FRAMEWORKS, REGULATIONS AND LEGISLATION EXIST?

All of the usual regulations and standards, such as GDPR, PCI DSS and ISO/IEC 27001, apply to the use of data within AI tools. This means any PII provided to an AI algorithm may need to be recorded in your ROPA (Record of Processing Activities) or assessed in a DPIA (Data Protection Impact Assessment). However, knowing whether this has occurred is often easier said than done when the algorithms and public platforms reside outside the corporate environment.

More recently introduced is the Artificial Intelligence Act, adopted by the European Union in 2024 and in force since 1 August 2024. The Act governs the use and impact of AI within EU member states. It is designed to manage the risks associated with AI systems and ensure they are used ethically and responsibly. The Act classifies AI systems according to their risk levels, ranging from minimal to unacceptable risk, and sets out strict requirements for high-risk applications, including transparency, data governance and human oversight.

The Act also prohibits certain uses of AI, such as systems that manipulate human behaviour to circumvent users’ free will, and the use of ‘real-time’ remote biometric identification systems in publicly accessible spaces for law enforcement purposes, subject to narrow exceptions.

In addition to the EU AI Act, a number of other regulations, standards and guidelines help govern the use of this emerging technology.

Here are some common frameworks and regulations and a few words about how they cover elements of AI:

- General Data Protection Regulation (GDPR): Sets out principles for data protection and privacy which are crucial when deploying AI systems that process personal data.

- Data Protection Act 2018 (UK): Adds specific provisions for automated decision-making, profiling, and data protection standards that need to be upheld in AI systems.

- Ethics Guidelines for Trustworthy AI: Not legally binding, these can be used as a framework to ensure that AI deployments are ethical and align with broader societal values and legal standards.

- ICO Guidance on AI and Data Protection: The UK Information Commissioner’s Office’s guidance on complying with data protection law when using AI, including how to mitigate risks such as bias.

- ISO/IEC 27001: Can be applied to the security measures surrounding AI deployments, particularly in protecting data and ensuring its integrity.

HOW DO YOU MITIGATE RISK FROM ARTIFICIAL INTELLIGENCE?

Follow best practice: scope AI platforms into your existing data protection policies and communicate this to your users. Ideally, back this up with technology that enforces the policy. For example, if your policy stops employees from uploading sensitive data into Google Gemini (formerly Bard), invest in technology to ensure they can’t. Blocking these tools outright is often too restrictive, and there is good reason not to. Instead, understand which uses would be valuable and give employees the ability to use the tools with the right data, whilst preventing the wrong data from being entered.
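As a rough illustration of letting the right data through while stopping the wrong data, the Python sketch below redacts email addresses and payment card numbers (validated with the Luhn checksum) from a prompt before it is sent to an AI tool. The patterns, placeholder strings and the `redact` function are hypothetical examples rather than any DLP product's API; commercial DLP tools use far richer detection.

```python
import re

# Hypothetical detectors for illustration only. Real DLP tools combine many
# more patterns with context, dictionaries and document fingerprinting.
EMAIL_RE = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")
# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_RE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def redact(text: str) -> tuple[str, list[str]]:
    """Replace likely card numbers and email addresses with placeholders.

    Returns the redacted text and a list of what was found, which could be
    logged for audit purposes.
    """
    findings: list[str] = []

    def _card(match: re.Match) -> str:
        digits = re.sub(r"\D", "", match.group())
        if luhn_valid(digits):  # only redact numbers that pass the checksum
            findings.append("CARD_NUMBER")
            return "[REDACTED CARD]"
        return match.group()  # leave non-card digit runs untouched

    def _email(match: re.Match) -> str:
        findings.append("EMAIL")
        return "[REDACTED EMAIL]"

    text = CARD_RE.sub(_card, text)
    text = EMAIL_RE.sub(_email, text)
    return text, findings


if __name__ == "__main__":
    prompt = ("Summarise the complaint from jane.doe@example.com "
              "about card 4111 1111 1111 1111.")
    safe, found = redact(prompt)
    print(safe)   # Summarise the complaint from [REDACTED EMAIL] about card [REDACTED CARD].
    print(found)  # ['CARD_NUMBER', 'EMAIL']
```

Redacting rather than blocking keeps the tool usable for the non-sensitive part of the request; the Luhn check avoids redacting arbitrary long numbers such as order references that merely look like card numbers.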

Be proactive: information security teams need to understand how the data entered into these platforms is stored and used. Only with this information can they decide whether it is an acceptable risk to the business. As AI technology evolves and becomes increasingly integrated into our everyday tools and applications, this assessment will inevitably need to become a continuous process.

AWARENESS AND EDUCATION

The AI trend will most certainly drive a further wave of shadow IT problems as well-meaning employees try to cut corners and increase their productivity through the use of AI tools to alter and produce data. Helping them understand what data is safe to expose in these tools and deploying options to prevent the exposure of unacceptable data is the challenge for IT and security teams going forward.

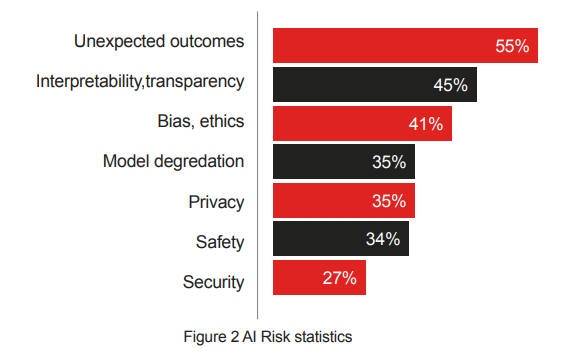

Figure 2 shows the results of a survey on AI risks. Alarmingly, security and privacy are nowhere near the top. For a technology that effectively remembers what it has seen in order to produce future results, this is a major red flag, especially as the race to introduce AI across organisations continues at a rapid pace.

To police the data fed to AI tools, an organisation must first understand what data it holds and processes. This data should be classified and then, based on that classification, the organisation can decide which data is ‘Artificial Intelligence platform approved’. This policy should be enforced through a DLP (Data Loss Prevention) capability that provides the widest possible coverage: not just for the ChatGPTs and Google Geminis of the world, but also through direct integration with the APIs of the service providers you use, for example via a SASE (Secure Access Service Edge) or CASB (Cloud Access Security Broker) platform.
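A minimal sketch of the classify-then-approve step, assuming a hypothetical four-level classification scheme and hypothetical destination names (`internal-llm`, `chatgpt`, `gemini`). In practice this decision would live in your DLP, SASE or CASB policy engine rather than in application code; the sketch only shows the shape of the rule.

```python
from enum import IntEnum


class Classification(IntEnum):
    """Hypothetical classification scheme, ordered from least to most sensitive."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


# Hypothetical policy: the most sensitive label each AI destination may receive.
AI_POLICY: dict[str, Classification] = {
    "internal-llm": Classification.CONFIDENTIAL,  # sanctioned, developed in-house
    "chatgpt": Classification.INTERNAL,
    "gemini": Classification.INTERNAL,
}


def is_ai_approved(label: Classification, destination: str) -> bool:
    """Decide whether data with this label may be sent to the destination."""
    ceiling = AI_POLICY.get(destination)
    if ceiling is None:
        return False  # default deny: unknown or unsanctioned AI tool
    return label <= ceiling  # IntEnum members compare by their integer value


if __name__ == "__main__":
    for label in Classification:
        allowed = [d for d in AI_POLICY if is_ai_approved(label, d)]
        print(label.name, "->", allowed or "blocked everywhere")
```

Unknown destinations are denied by default, so a newly discovered AI tool stays blocked until someone deliberately adds it to the policy. Using an ordered `IntEnum` keeps the rule a single "label at or below the ceiling" comparison.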

If you would like to discuss how AI could impact your organisation’s data, we are here to help. Contact us at hello@handd.co.uk or request a call back to ensure your project meets all privacy requirements and exceeds expectations.

Learn more in our guide ‘Data Security and Data Protection in 2024’, where we focus on the common projects, initiatives and areas that we feel need particular attention throughout 2024 and beyond.